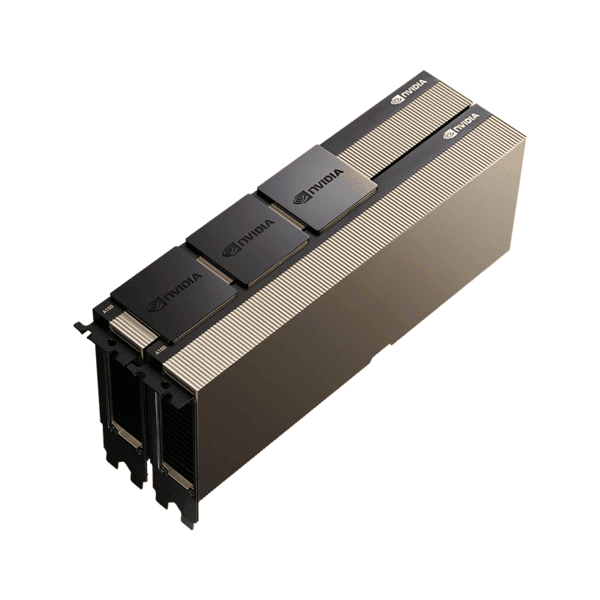

NVIDIA A100 80GB HBM2e Tensor Core GPU

The NVIDIA A100 Tensor Core GPU accelerates AI, data analytics, and high-performance computing (HPC) applications at any scale and powers the highest-performing elastic data centers worldwide. As the engine of the NVIDIA data center platform, the A100 delivers up to 20X higher performance than the previous NVIDIA Volta generation. It can scale up or be partitioned into as many as seven isolated GPU instances with Multi-Instance GPU (MIG), giving elastic data centers a unified platform that adapts dynamically to shifting workload demands. Its Tensor Core technology supports a broad range of math precisions, so a single accelerator suits many kinds of workloads.

The A100 80GB variant doubles the GPU memory of the 40GB model and delivers memory bandwidth of up to 2 terabytes per second (TB/s), shortening the time to solution for the largest models and most extensive data sets. The A100 is part of the comprehensive NVIDIA data center solution, which spans hardware, networking, software, libraries, and optimized AI models and applications from NGC. As an end-to-end AI and HPC platform for data centers, it lets researchers achieve real-world results and deploy solutions into production at scale.

TECHNICAL SPECIFICATION

| Specification | Value |
|---|---|
| Brand | NVIDIA |
| Type | Tensor Core GPU |
| Model | A100 |
| Variants | NVLink HGX, PCIe |
| GPU Architecture | NVIDIA Ampere |
| Double-Precision Performance | FP64: 9.7 TFLOPS; FP64 Tensor Core: 19.5 TFLOPS |
| Single-Precision Performance | FP32: 19.5 TFLOPS; Tensor Float 32 (TF32): 156 TFLOPS (312 TFLOPS with sparsity) |
| Half-Precision Performance | 312 TFLOPS (624 TFLOPS with sparsity) |
| Bfloat16 | 312 TFLOPS (624 TFLOPS with sparsity) |
| Integer Performance | INT8: 624 TOPS (1,248 TOPS with sparsity) |
| GPU Memory | 40GB; 80GB (NVLink HGX); 80GB (PCIe) |
| GPU Memory Bandwidth | 1,555 GB/s (40GB); 2,039 GB/s (80GB NVLink HGX); 1,935 GB/s (80GB PCIe) |
| Error-Correcting Code | Yes |
| Interconnect Interface | PCIe Gen4: 64 GB/sec; third-generation NVIDIA NVLink: 600 GB/sec |
| Form Factor | 4/8 SXM GPUs in NVIDIA HGX A100 (NVLink HGX); PCIe |
| Multi-Instance GPU (MIG) | Up to 7 GPU instances |
| Max Power Consumption | 400 W (NVLink HGX); 300 W (PCIe) |
| Delivered Performance for Top Apps | 100% (NVLink HGX); 90% (PCIe) |
| Thermal Solution | Passive |
| Compute APIs | CUDA, DirectCompute, OpenCL, OpenACC |

NVIDIA AMPERE ARCHITECTURE

The A100 GPU covers a wide range of acceleration needs, from the smallest job to the largest multi-node workload: MIG partitions a single GPU into smaller instances, while NVLink combines multiple GPUs to accelerate large-scale workloads. This versatility lets IT managers maximize the utilization of every GPU in their data center.

THIRD-GENERATION TENSOR CORES

The NVIDIA A100 delivers 312 teraFLOPS (TFLOPS) of deep learning performance. Compared to NVIDIA Volta GPUs, that is 20X the Tensor FLOPS for deep learning training and 20X the Tensor TOPS for deep learning inference.

NEXT-GENERATION NVLINK

NVIDIA NVLink in the A100 delivers 2X higher throughput than the previous generation. Combined with NVIDIA NVSwitch, up to 16 A100 GPUs can be interconnected at up to 600 gigabytes per second (GB/sec), maximizing application performance on a single server. NVLink is available on A100 SXM GPUs through HGX A100 server boards and on PCIe GPUs through an NVLink Bridge, which links up to 2 GPUs.
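To put the interconnect figures in perspective, the following Python sketch estimates the ideal time to move a buffer at the peak rates quoted on this page (64 GB/sec for PCIe Gen4, 600 GB/sec for NVLink). The function name and the 80 GB payload are illustrative assumptions, and real transfers will fall short of these peak rates.

```python
# Peak interconnect rates quoted on this page, in GB/sec.
PCIE_GEN4_GB_PER_SEC = 64
NVLINK_GB_PER_SEC = 600


def transfer_seconds(payload_gb: float, link_gb_per_sec: float) -> float:
    """Ideal transfer time at peak link rate; ignores latency and protocol overhead."""
    if link_gb_per_sec <= 0:
        raise ValueError("link rate must be positive")
    return payload_gb / link_gb_per_sec


if __name__ == "__main__":
    payload_gb = 80  # hypothetical payload: the full contents of an 80GB GPU
    for name, rate in (("PCIe Gen4", PCIE_GEN4_GB_PER_SEC), ("NVLink", NVLINK_GB_PER_SEC)):
        print(f"{name}: {transfer_seconds(payload_gb, rate):.3f} s")
```

At these peak rates the same payload moves roughly nine times faster over NVLink than over PCIe Gen4, which is why multi-GPU training setups favor NVLink-connected boards.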

MULTI-INSTANCE GPU (MIG)

An A100 GPU can be partitioned into up to seven GPU instances, fully isolated at the hardware level with their own high-bandwidth memory, cache, and compute cores. MIG enables developers to access breakthrough acceleration for all their applications, and IT administrators can provide the right-sized GPU acceleration for every task, optimizing utilization and expanding access to every user and application.
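As a rough illustration of right-sizing, the Python sketch below checks whether a requested mix of MIG instances fits within one A100 80GB. The profile names and slice counts are assumptions taken from NVIDIA's MIG documentation rather than from this page, and the check is necessary but not sufficient: the driver also enforces placement rules that this sketch does not model.

```python
# (compute slices, memory slices) per MIG profile on an A100 80GB.
# Assumption: values follow NVIDIA's MIG documentation; verify them for your driver version.
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
MAX_COMPUTE_SLICES = 7  # matches the "up to seven GPU instances" limit
MAX_MEMORY_SLICES = 8


def fits_slice_budget(requested: list[str]) -> bool:
    """Return True if the requested profiles stay within the GPU's slice budget."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= MAX_COMPUTE_SLICES and memory <= MAX_MEMORY_SLICES


if __name__ == "__main__":
    print(fits_slice_budget(["1g.10gb"] * 7))            # seven small instances
    print(fits_slice_budget(["4g.40gb", "3g.40gb"]))     # two large instances
    print(fits_slice_budget(["3g.40gb", "3g.40gb", "1g.10gb"]))  # exceeds memory slices
```

On real hardware the instances themselves are created with NVIDIA's tooling; a check like this is only useful for planning a layout ahead of time.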

HBM2E

With up to 80 gigabytes (GB) of high-bandwidth memory (HBM2e), the A100 is the first GPU to reach a memory bandwidth of over 2 TB/sec, and it achieves a dynamic random-access memory (DRAM) utilization efficiency of 95%. The A100 offers 1.7X higher memory bandwidth than the previous generation.

| Memory Specification | Value |
|---|---|
| Memory Clock | 1512 MHz |
| Memory Type | HBM2e |
| Memory Size | 80GB |
| Memory Bus Width | 5120 bits |
| Peak Memory Bandwidth | Up to 1.94 TB/s |
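The peak bandwidth figure follows from the memory clock and bus width listed above. The Python sketch below reproduces the arithmetic, assuming two transfers per clock cycle (double data rate, as used by HBM2e); the function name is illustrative.

```python
def peak_bandwidth_gb_per_sec(
    memory_clock_mhz: float,
    bus_width_bits: int,
    transfers_per_clock: int = 2,  # assumption: double data rate
) -> float:
    """Theoretical peak memory bandwidth in GB/sec (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_sec = memory_clock_mhz * 1e6 * transfers_per_clock
    return transfers_per_sec * bytes_per_transfer / 1e9


if __name__ == "__main__":
    # Values from the memory table: 1512 MHz clock, 5120-bit bus.
    print(f"{peak_bandwidth_gb_per_sec(1512, 5120):.2f} GB/sec")
```

With the listed values the result is about 1,935 GB/sec, which rounds to the 1.94 TB/s quoted for this card.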

STRUCTURAL SPARSITY

AI networks have millions to billions of parameters, and not all are necessary for accurate predictions. Some can be set to zero, making the models “sparse” without sacrificing accuracy. Tensor Cores in the A100 can deliver up to 2X higher performance for sparse models. While the sparsity feature is more beneficial for AI inference, it can also enhance model training performance.
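A minimal Python sketch of the idea, assuming the 2:4 pattern described in NVIDIA's Ampere documentation (at most two non-zero values in every group of four weights). This detail is not stated on this page, and the function below is a hypothetical illustration of magnitude-based pruning, not NVIDIA's tooling.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude weights in each consecutive group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")
    pruned: list[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        ranked = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)
        keep = set(ranked[:2])
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    print(prune_2_of_4([0.1, -0.9, 0.5, 0.05, 1.0, 2.0, 3.0, 4.0]))
```

Half of the weights end up as zeros in a regular pattern, which is what allows hardware to skip them. In practice a pruned model is usually fine-tuned afterwards to recover accuracy.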

Suitable For: The NVIDIA A100 Tensor Core GPU is the flagship of NVIDIA's data center platform for deep learning, high-performance computing (HPC), and data analytics. It accelerates over 1,800 applications, including every major deep learning framework, and is available across platforms ranging from desktops to servers to cloud services.

Order Information

Manufacturers are responsible for warranties, and warranty validity is based on the purchase date. At Grabnpay, we make sure the products we sell are delivered on time and in their original condition. Whether the issue is physical damage or an operational fault, our team will help you find a solution. We also help you install, configure, and manage your devices, and we will guide you through every step of filing a warranty claim.

| Product | Description |
|---|---|
| NVIDIA A100 Tensor Core GPU | 1 Slot NVIDIA A100 Tensor Core GPU, NVLink, PCIe |